
We start with the definitions of entropy and information in physics and engineering. These use the concepts of state and symbol, grounded in probability theory and applied to space and time, respectively. We propose the quantity of information and the level of organization as the principal characteristics of life. We calculate the quantity of information in the genetic code and compare the result with the dimensions of the universe in time and space. Based on these ideas, we define a total signal as a function of a deterministic mechanical signal and a probabilistic vital signal. We propose a system called AnaSyn that performs a mathematical Analysis on the emitting side of a communication channel and a mathematical Synthesis on the receiving side. We present the hypothesis that the electro-chemical activity of the neurons in our brain is a kind of symbol of outer reality that measures its quantity of information. We also present the hypothesis that the probabilistic laws of quantum physics may be a sign of the presence of life within atoms. We conclude by discussing how information theory, born in engineering, could form a scientific link between physics and biology.

"The Urantia Book", paragraph 58.2_3: "And yet some of the less imaginative of your mortal mechanists insist on viewing material creation and human evolution as an accident. The Urantia midwayers have assembled over fifty thousand facts of physics and chemistry which they deem to be incompatible with the laws of accidental chance, and which they contend unmistakably demonstrate the presence of intelligent purpose in the material creation. And all of this takes no account of their catalogue of more than one hundred thousand findings outside the domain of physics and chemistry which they maintain prove the presence of mind in the planning, creation, and maintenance of the material cosmos."

The theory of probability, together with the concept of state, gave birth to statistical physics [1] and to the definition of entropy. Entropy is a function of the number of equally probable states of a physical system. To count the possible states of a physical system, we use a mathematical tool of probability theory called combinatorial analysis. Suppose, for example, that we have a system of two magnets, each of which can be oriented up or down. How many orientation states are possible for the two magnets? The number n2 of possible states is 2 possibilities for the first magnet times 2 possibilities for the second; mathematically:

$$ n_2 = 2 \times 2 = 2^2 = 4 \tag{1} $$

Suppose now that we have another similar physical system with 3 magnets. In this case the number n3 of possible states is:

$$ n_3 = 2 \times 2 \times 2 = 2^3 = 8 \tag{2} $$

Now suppose that we unite the two systems above to form a new one with a total of 5 magnets. In this case n5 is given by:

$$ n_5 = n_2 \times n_3 = 2^2 \times 2^3 = 2^5 = 32 \tag{3} $$

We said that entropy is a function of the number of states of a system. The chosen function is the logarithm: entropy is the logarithm of the number of equally probable states of a physical system. One motive for this choice is that we want the entropy of a system formed by the union of two others to be the sum of their entropies. And in fact, the entropy of the above system of 5 magnets, formed by the union of the two systems of 2 and 3 magnets, is given by:

$$ \log n_5 = \log (n_2 \times n_3) = \log n_2 + \log n_3 \tag{4} $$

As we can see, the rightmost member of this equation is the sum of the entropies of the systems of 2 and 3 magnets. In conclusion, the mathematical definition of the entropy of a physical system with a certain number of equally probable states is:

entropy = logarithm (number of states)
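The counting argument above can be checked by brute force. The Python sketch below is our own illustration, not the author's method: it enumerates every up/down orientation of a set of magnets with `itertools.product`, and the helper names `count_states` and `entropy` are hypothetical. Base 2 is used for the logarithm here, but the additivity holds in any base.

```python
import itertools
import math


def count_states(num_magnets: int) -> int:
    """Enumerate every up/down orientation of num_magnets magnets and count them."""
    return sum(1 for _ in itertools.product(("up", "down"), repeat=num_magnets))


def entropy(num_states: int) -> float:
    """Entropy as the base-2 logarithm of the number of equally probable states (assumed base)."""
    return math.log2(num_states)


n2, n3, n5 = count_states(2), count_states(3), count_states(5)
print(n2, n3, n5)  # 4 8 32

# Joining the 2-magnet and 3-magnet systems multiplies the state counts...
assert n5 == n2 * n3
# ...and the logarithm turns that product into a sum of entropies.
assert math.isclose(entropy(n5), entropy(n2) + entropy(n3))
print(entropy(n2), entropy(n3), entropy(n5))  # 2.0 3.0 5.0
```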

The quantity of information that can be stored in the memory of a digital computer with a certain number of equally probable states is:

information = logarithm (number of states)

The memory of our digital computers is composed of elements that can be in one of two states. These two-state elements are called binary digits, or bits for short. If we have 8 binary digits together, each with two possible states, the total number n8 of states of this 8-bit system is:

$$ n_8 = 2 \times 2 \times 2 \times 2 \times 2 \times 2 \times 2 \times 2 = 2^8 = 256 \tag{5} $$

For numerical simplicity, we choose base-2 logarithms to calculate the quantity of information precisely in the engineering of binary digital computers. So a system of 8 binary digits with equally probable states can store an amount of information given by:

$$ I = \log_2 n_8 = \log_2 2^8 = 8 \text{ bits} \tag{6} $$

The probability of occurrence of a given state in a system with "n" equally probable possible states is:

$$ P = \frac{1}{n} \tag{7} $$

In the case of equally probable states, this implies that:

$$ \text{information} = \log n = \log \frac{1}{P} \tag{8} $$
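A minimal Python check of the 8-bit example, and of the equivalence between log n and log(1/P) when all states are equally probable. The helper name `information_bits` is hypothetical and chosen for illustration only.

```python
import math


def information_bits(num_states: int) -> float:
    """Information storable in num_states equally probable states, in bits."""
    return math.log2(num_states)


n8 = 2 ** 8                  # 8 binary digits, each with 2 states
p = 1 / n8                   # probability of any single state, P = 1/n
print(n8)                    # 256
print(information_bits(n8))  # 8.0
print(math.log2(1 / p))      # 8.0, the same value written as log2(1/P)
```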

In his memorable paper, Shannon [2], the father of information theory, analyzed the quantity of information that can be transmitted through a communication channel under a given noise condition. He associated each possible state of a communication channel with a symbol of an alphabet. In this practical case, the states or symbols can occur with different probabilities. So, for states and symbols that are not equally probable, the information "I" transmitted by a symbol with probability "P" of occurrence is given by:

$$ I = \log \frac{1}{P} \tag{9} $$

Information is the logarithm of the inverse of the probability of a symbol. This symbol could be the state of a computer's memory, localized in space. It could also be an event in a communication channel, happening at a moment in time. Symbol, state, space. Symbol, event, time. Through a generalization of the concept of symbol, we will generalize the concept of information and its theory in section 8.
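To illustrate the formula for symbols that are not equally probable, the sketch below computes log2(1/P) for a made-up four-symbol alphabet. The probabilities are assumptions, chosen only so that the results come out in whole bits. Rarer symbols carry more bits, and a symbol with probability 1 carries none.

```python
import math


def self_information(p: float) -> float:
    """Shannon self-information I = log2(1/P), in bits, of a symbol with probability p."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return math.log2(1 / p)


# Hypothetical alphabet with unequal probabilities (they sum to 1).
alphabet = {"A": 0.5, "B": 0.25, "C": 0.125, "D": 0.125}
for symbol, p in alphabet.items():
    print(symbol, p, self_information(p))
# A 0.5 1.0
# B 0.25 2.0
# C 0.125 3.0
# D 0.125 3.0

# A certain symbol (P = 1) carries no information.
print(self_information(1.0))  # 0.0
```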

"The Urantia Book", paragraphs 58.6_2-3: "Although the evolution of vegetable life can be traced into animal life, and though there have been found graduated series of plants and animals which progressively lead up from the most simple to the most complex and advanced organisms, you will not be able to find such connecting links between the great divisions of the animal kingdom nor between the highest of the prehuman animal types and the dawn men of the human races. These so-called `missing links' will forever remain missing, for the simple reason that they never existed."

"From era to era radically new species of animal life arise. They do not evolve as the result of the gradual accumulation of small variations; they appear as full-fledged and new orders of life, and they appear suddenly."

The states and movements of living organisms have a small probability of arising naturally in dead matter, and consequently those states carry a large quantity of information. The probability is very small that an organism would suddenly be formed by atoms of dead matter coming together in a region of space to form all the molecules, tissues, organs and the whole body of that organism. According to physical laws, it is very improbable that all the atoms of a few kilograms of matter would suddenly move upward and rise against the force of gravity. Yet this improbable movement happens when I lift my little child in my arms. According to the laws of physics, the state of organization and the surprising movements of life are very improbable to arise naturally from dead matter. A small probability implies a large quantity of information because:

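$$ P \ll 1 \;\Rightarrow\; \frac{1}{P} \gg 1 \;\Rightarrow\; I = \log \frac{1}{P} \text{ is large} \tag{10} $$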

The words organ and organism come from organization. The bodies of living beings are in a state of a high level of organization. This state has a small probability of arising from dead matter, and because of that the quantity of information of the system is large. The machines made by humans are also a sign of the presence of life and of an intelligent organizer. There is a small probability that the atoms of dead matter would suddenly form a house, a car, a computer or other human machines. Because it is so improbable that those machines appeared without a creator, these physical systems are in a state of a high level of organization and of a large quantity of information.

Because of these facts, in this article we present the quantity of information in the state and movements of a system as a scientific measure of the presence of life. From the energetic and chemical analysis of a physical system alone, we cannot be sure whether it is alive or dead. Energy, mass and the other quantities of physical theories are more applicable to the study of dead bodies. It is also important to mention that information is a quantity without physical dimension. This is so for two mathematical reasons: 1 - the frequency of a state or event needed to calculate the probability is a pure number; 2 - the logarithmic function applied to the inverse of the probability, needed to calculate the quantity of information, yields a dimensionless result.

As a practical consequence, let us analyze the level of organization and the quantity of information of viruses and crystals, which are systems at the boundary between the living and the dead kingdoms. Some biologists do not even consider viruses to be living beings, because they lack many characteristics of other organisms. A virus consists only of a genetic molecule enveloped by a protein coat. Viruses do not move or grow by themselves. They have no energetic or chemical metabolism. They reproduce using the structures of other living cells. And they can be crystallized like stones and remain unchanged for thousands of years. So what is the only characteristic of life in a virus? It is the level of organization and the high quantity of information in its genetic code. Because of that, we would say that the information carried in the state of matter of a virus is one of the only marks of life in this microsystem. On the other side, in the dead kingdom, crystals are among the material substances with the highest level of organization. This level of organization, and consequently high quantity of information, would imply that crystals bear more of the "life mark" than other dead substances. And in fact, each crystal has a characteristic form that multiplies itself when placed in a suitable amorphous substrate. We even say that crystals are seeds that we sow and that grow in the substrate. So crystals, the dead substances with the greatest quantity of information, have a kind of reproducibility, which is a property of life. This fact is one more argument in favor of the idea that the quantity of information is the principal mark of life.

The quantity of information increases with the inverse of the probability of a state in a region of space, and of an event in time. If we analyze the probability of the state of matter localized in a region of space, then in general, whenever we calculate a large quantity of information, we have found the position of a living organism. The state of organization of living bodies is improbable in dead matter and, because of that, full of information. In section 5 we will calculate the quantity of information in the human genome. On the other hand, if we compute the probability of an event or movement in time, we can also detect the presence of life. Dead bodies move in a more predictable way than living organisms. The surprising movements of life are full of information. We will see in section 6 that the only information in a moving scene is in the vital signals that come from life. The mechanical movements of a dead body, fully determined by a mathematical equation, do not carry any new information. This fact will be used in section 7 to propose a communication system capable of finding and transmitting the only real information in a moving scene: the vital signals.

"The Urantia Book", paragraph 62.0_1: "ABOUT one million years ago the immediate ancestors of mankind made their appearance by three successive and sudden mutations stemming from early stock of the lemur type of placental mammal. The dominant factors of these early lemurs were derived from the western or later American group of the evolving life plasm. But before establishing the direct line of human ancestry, this strain was reinforced by contributions from the central life implantation evolved in Africa. The eastern life group contributed little or nothing to the actual production of the human species."

We will calculate the number of possible combinations of our genetic code. According to recent research, the human genome has about 3.2 billion nucleic bases. Each of these bases can be one of four types in the genetic chain. This gives a number "N" of possible combinations of:

$$ N = 4^{3.2 \times 10^{9}} \approx 10^{1.93 \times 10^{9}} \tag{11} $$

This number has more than one billion digits (about 1.9 billion). Just to write it, we would fill more than 400,000 pages with 2,500 characters each. Those pages are enough to form 4,000 books of 100 pages each. These could fill 100 shelves holding 40 books each. Those shelves could make up 10 bookcases with 10 shelves each, filling a small library with the books needed just to write this large number in decimal notation. If all these possible genetic codes are equally probable, the probability "P" of obtaining the unique combination of your genome among all the possibilities is:

$$ P = \frac{1}{N} = 4^{-3.2 \times 10^{9}} \tag{12} $$

This small probability, and the great luck it represents, carries a quantity of information "I" given by:

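$$ I = \log_2 \frac{1}{P} = \log_2 4^{3.2 \times 10^{9}} = 2 \times 3.2 \times 10^{9} = 6.4 \times 10^{9} \text{ bits} \tag{13} $$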
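The genome figures can be reproduced without ever building N, which would take 6.4 billion bits, by working with logarithms. This Python sketch assumes the text's round figure of 3.2 billion bases and four possible bases per position; the variable names are ours.

```python
import math

BASES = 3.2e9        # approximate number of nucleic bases in the human genome (from the text)
ALPHABET = 4         # four possible bases at each position

# N = 4**BASES is far too large to build, so work with its logarithm instead.
log10_N = BASES * math.log10(ALPHABET)
digits = math.floor(log10_N) + 1                  # decimal digits needed to write N
pages = digits / 2500                             # at 2,500 characters per page

# For P = 1/N, the information is I = log2(1/P) = log2(N) = BASES * log2(4).
info_bits = BASES * math.log2(ALPHABET)

print(f"digits of N: {digits:.3e}")               # about 1.93 billion digits
print(f"pages needed: {pages:,.0f}")              # roughly 770,000 pages
print(f"information: {info_bits:.2e} bits")       # 6.40e+09 bits
```

The page count is consistent with the text's lower bound of "more than 400,000 pages", which was computed from one billion digits.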

Now let us calculate the number of possible genetic codes we would obtain if, since the beginning of the universe, we had tried new genetic combinations every second in a substance containing one mole of human genomes per liter, filling the whole present volume of the known universe. The age of the universe is estimated to be on the order of 10 billion years. This time in seconds gives a period of:

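$$ t \approx 10^{10} \text{ years} \times 3.16 \times 10^{7} \, \frac{\text{s}}{\text{year}} \approx 3.16 \times 10^{17} \text{ s} \tag{14} $$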

Multiplying this time by the speed of light and calculating the volume of the sphere so formed, we obtain an estimate of the volume of the universe of:

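$$ V = \frac{4}{3}\pi (c\,t)^3 \approx \frac{4}{3}\pi \left(3 \times 10^{8} \,\tfrac{\text{m}}{\text{s}} \times 3.16 \times 10^{17} \text{ s}\right)^3 \approx 3.6 \times 10^{78} \text{ m}^3 \approx 3.6 \times 10^{81} \text{ liters} \tag{15} $$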

Now imagine that each liter of this whole volume is filled with one mole of human genomes. One mole of this substance contains 6 × 10^23 genetic codes. Suppose that since the beginning of the universe, every second, each human genome of this substance filling the whole volume of the universe "tries" a new combination. What is the total number "n" of combinations formed in this way?

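$$ n \approx 6 \times 10^{23} \times 3.6 \times 10^{81} \times 3.16 \times 10^{17} \approx 7 \times 10^{122} \approx 4^{204} \tag{16} $$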

With luck, this number of combinations would be enough to hit the right sequence of a genetic chain of only 204 nucleic bases, since 4^204 ≈ 6.6 × 10^122. But that is close to the size of the smallest genes of the human genome, which has 3.2 billion nucleic bases in total. The fact is that, even if we take into account natural selection and reproduction with recombination, the total number of new genomes on Earth since life began is far smaller than the number of combinations calculated above for the whole volume of the universe since its beginning. The obvious conclusion is that the human genome is so highly organized and full of information that it is almost impossible for it to have come from chaotic dead matter.
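The chain of estimates in equations (14) and (15), and the final count of combinations, can be scripted as below. The constants are rounded assumptions (a 365.25-day year, c = 3 × 10^8 m/s, Avogadro's number rounded to 6 × 10^23 as in the text), and the last step asks for the longest base sequence L with 4^L not exceeding n.

```python
import math

SECONDS_PER_YEAR = 3.156e7          # assumed 365.25-day year
AGE_YEARS = 1e10                    # "on the order of 10 billion years" (from the text)
C = 3.0e8                           # speed of light, m/s
AVOGADRO = 6.0e23                   # genomes per mole, rounded as in the text
LITERS_PER_M3 = 1e3

t = AGE_YEARS * SECONDS_PER_YEAR                          # equation (14): age in seconds
r = C * t                                                 # radius reached by light
volume_liters = 4 / 3 * math.pi * r**3 * LITERS_PER_M3    # equation (15)

# One mole of genomes per liter, each trying one new combination per second.
n = AVOGADRO * volume_liters * t

# Longest chain of 4-letter bases whose every combination could be tried: 4**L <= n.
max_bases = math.floor(math.log(n, 4))

print(f"t = {t:.2e} s")
print(f"V = {volume_liters:.2e} liters")
print(f"n = {n:.2e} combinations")
print(f"enough to cover every sequence of {max_bases} bases")
```

With these constants the script gives n of roughly 6.7 × 10^122 combinations and a chain of 204 bases, in line with the text.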

In the previous section, we analyzed the information calculated from the probability of the combination state of our genetic code. The probability of an organized state in space is used to calculate the entropy of a system in statistical physics. In engineering, the probability of an event in time is used to calculate the quantity of information in a communication channel. The large quantity of information in living organisms appears not only in their highly organized state in space but also in their surprising free movements in time. In this section we will show that almost all the information in a moving system lies in the movements of life, which we will call vital signals. From this result, we will propose in the next section a practical system for communicating information.

We call any transmissible signal a total signal. The total signal is a function of a deterministic signal and a vital signal. The deterministic signal is the part whose future values are fully determined by a mathematical equation. The vital signal is the remaining, informative part. In mathematical language:

$$ s_{\text{total}}(t) = f\left(s_{\text{deterministic}}(t),\; s_{\text{vital}}(t)\right) \tag{17} $$

We state that the information of the total signal is in the vital signal, because the vital signal is the only surprising, unpredictable, probabilistic or stochastic part of the total. The future values of the deterministic signal are, by definition, fully determined by a mathematical equation. So the probability of receiving a given value of a deterministic signal is always "1". If the probability of a given value is "1", the information it carries is "0", because:

$$ I = \log \frac{1}{P} = \log \frac{1}{1} = 0 \tag{18} $$

There is another way to show that the information transmitted in a mathematically predictable deterministic signal is zero. Suppose that we want to transmit a video signal of the movements of the sun and planets of our solar system. Suppose also that those movements obey the equations of classical mechanics [3] precisely, and that we transmit through a communication channel, in a finite interval of time, the equations and the initial values of the movement. Finally, suppose that the receiving side, with this information about the initial positions and velocities of the celestial bodies and with the equations that describe their movements, calculates all future positions and reconstructs the video signal for an infinite time. If a finite duration of this video signal contained any information, it would be possible to transmit an infinite quantity of information in a finite amount of time: the infinite information of the video reconstructed for an infinite time would have been transmitted in the finite time needed to send the initial state and the equations of movement. The same paradox occurs for every deterministic signal, because we can transmit its parameters and equations in a finite amount of time and the receiving side can determine its future values for all the infinite time ahead. The obvious resolution of this paradox is to conclude that the information in a deterministic signal is "0", for a finite as well as an infinite amount of time.
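The thought experiment can be made concrete with a toy deterministic signal. In this hypothetical Python sketch a sine wave stands in for the planetary motions: the emitter sends three parameters once, and the receiver regenerates any number of samples that match the source exactly, so the payload does not grow with the duration of the signal. `SineModel`, `emit` and `receive` are illustrative names, not part of the original text.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SineModel:
    """Hypothetical deterministic signal: x(t) = amplitude * sin(2*pi*freq*t + phase)."""
    amplitude: float
    freq_hz: float
    phase: float

    def sample(self, t: float) -> float:
        return self.amplitude * math.sin(2 * math.pi * self.freq_hz * t + self.phase)


def emit(model: SineModel) -> tuple[float, float, float]:
    # Emitter: send only the equation's parameters, once, in finite time.
    return (model.amplitude, model.freq_hz, model.phase)


def receive(params: tuple[float, float, float], num_samples: int, rate_hz: float) -> list[float]:
    # Receiver: rebuild the model and regenerate as many samples as it wants.
    model = SineModel(*params)
    return [model.sample(k / rate_hz) for k in range(num_samples)]


source = SineModel(amplitude=1.0, freq_hz=0.5, phase=0.0)
payload = emit(source)
for n in (10, 1_000, 100_000):
    rebuilt = receive(payload, n, rate_hz=10.0)
    original = [source.sample(k / 10.0) for k in range(n)]
    assert rebuilt == original      # exact match: the samples add nothing the receiver lacked
    print(n, "samples rebuilt from", len(payload), "parameters")
```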

The vital and essential part of a signal is the only informative part of the total. By definition, the vital signal is the part of the total that cannot be determined exactly by a mathematical equation. Vital signals are probabilistic signals. We chose the term vital because, in general, the movements of life are the part of a signal that we cannot express in an exact deterministic mathematical equation. It is important to remember that complex systems also generate probabilistic signals. We will also recall in section 9 that the laws of quantum physics are probabilistic. We note, too, that in a certain sense a deterministic mathematical equation is a particular case of a probabilistic one: the case where the probability is one.

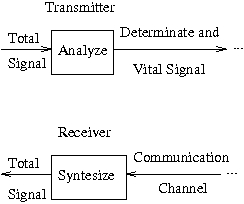

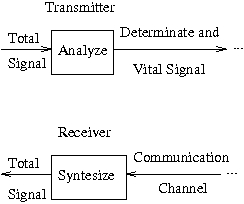

The previous section established the theoretical basis of a communication system called AnaSyn. In communication engineering, a MoDem modulates and demodulates and a CoDec codes and decodes; in the same way, AnaSyn analyzes and synthesizes the signal on the emitting and receiving sides of the communication channel, respectively. On the emitting side, AnaSyn analyzes the total signal and separates its deterministic and vital parts. The mathematical analysis also determines which analytical equation or transform best describes the total. The result of that analysis gives the mathematical description of the deterministic part. Removing the deterministic part from the total signal leaves the vital part. The other side of the communication channel receives the equations and parameters of the deterministic signal, together with the vital signal. AnaSyn then synthesizes the deterministic part and calculates the total signal as a function of it and of the vital part. This communication system is shown in diagram 7.

Of course, the analysis of the signal is not only mathematical but also physical. Knowledge about the physical source, the transmission environment and the measuring instrument of the signal is important in deciding what type of equation to look for in our analysis. For example, for a video signal, the deterministic part would be a three-dimensional model of the environment and the objects of the scene, governed by the laws of Euclidean geometry and classical physics. The vital part of the signal would be the movements performed by the living beings in the scene. A famous Internet game called "Quake" does something similar to this procedure. "Quake" is a fight that takes place in different architectural environments; each player is a soldier who moves and sees the other participants moving too. The movements were surprisingly fast even at the low speeds of the early Internet. To achieve that speed, the geometry of all environments and the number, shape and uniforms of all players are known on each side before the game starts. In other words, the deterministic part of the game's video signal is known in advance. So only the movements of the living players are transmitted. Those movements are the vital part of the total signal, the part that carries new information and is transmitted as soon as it occurs. Suppose now that we are transmitting a person's speech over the Internet with a webphone. We can imagine that the AnaSyn system first captures the personal characteristics of each speaker's voice. Those unchanging equations and parameters of the speakers are the deterministic part of the speech signal, transmitted before the conversation starts. In this case, the vital part may be composed of the recognized characters and words and of the speakers' intonation.

We know that this mathematical and physical analysis is not trivial. But in theory, there are physical laws that describe to a great extent the movements of dead bodies and systems. We also know that the movements of living organisms are a part of physical reality that is very hard to fit into exact mathematical equations. Of course, some physical systems also have very complex movements, like the waves of the ocean and the clouds in the sky. There will be cases where the separation between the deterministic and the probabilistic signal is not clear. There is also noise, and the limitations of our measuring instruments. Sometimes it will be difficult to find the best mathematical model for a given situation. All of these are open problems of this infant theory of information of vital signals.
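One way to picture the analysis and synthesis steps is the Python sketch below. It is an assumption-laden toy, not the AnaSyn design itself: it takes the total signal to be the sum of the two parts (one particular choice of the function f), uses a least-squares polynomial fit (`numpy.polyfit`) as the "analytical equation" for the deterministic part, and treats the residual as the vital part. Choosing the model is exactly the open problem described above; here the polynomial degree is simply fixed at 2.

```python
import numpy as np


def analyze(t: np.ndarray, total: np.ndarray, degree: int = 2):
    """Emitter side (hypothetical): fit a polynomial as the deterministic part and keep
    the residual as the vital part. Assumes total = deterministic + vital."""
    coeffs = np.polyfit(t, total, degree)          # parameters of the deterministic equation
    deterministic = np.polyval(coeffs, t)
    vital = total - deterministic
    return coeffs, vital


def synthesize(t: np.ndarray, coeffs: np.ndarray, vital: np.ndarray) -> np.ndarray:
    """Receiver side (hypothetical): regenerate the deterministic part and add the vital part."""
    return np.polyval(coeffs, t) + vital


rng = np.random.default_rng(seed=0)
t = np.linspace(0.0, 10.0, 200)
mechanical = 0.5 * t**2 - 2.0 * t + 1.0            # predictable, "dead" motion
living = rng.normal(scale=0.3, size=t.size)        # unpredictable stand-in for a vital signal
total = mechanical + living

coeffs, vital = analyze(t, total)
rebuilt = synthesize(t, coeffs, vital)
assert np.allclose(rebuilt, total)
print("deterministic parameters:", np.round(coeffs, 2))
print("vital part std:", round(float(vital.std()), 3))
```

Only the three coefficients describe the deterministic part; the vital residual is what would have to be sent continuously.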

To conclude this section, let us give an example of AnaSyn. Suppose we want to transmit the video signal of a computer monitor showing a static background, a bottom-up blue gradient, plus the dynamic movements of a pointer controlled by a mouse. The background image is the deterministic signal, and the movements of the pointer are the vital part, connected to the hand of the computer's user. AnaSyn would first transmit the equation that describes the background. The deterministic signal is given in this case by:

$$ \text{lightblue} = \frac{\text{height}}{\text{heightmax}} \tag{19} $$

Where "lightblue" is the intensity, from 0 to 1, of the blue component of a trichromatic pixel of the background image on the monitor. The vertical coordinate of the pixel is "height", and the maximum value of "height", at the top of the screen in a Cartesian system, is "heightmax". Once this background equation has been transmitted, we only need to transmit the future positions of the pointer on the computer screen to reconstruct the video of the system. The changing positions of the pointer are the vital part of the total image signal. With the AnaSyn system described above, the bit rate is only what is needed to transmit the descriptors of the background equation once and, after that, fewer than 23 bits for each Cartesian coordinate of the pointer when it moves. If we wanted to transmit this video without any coding, at 20 images per second on an 800x600-pixel monitor with 24 bits of color per pixel, we would need 20 × 800 × 600 × 24 = 230,400,000 bits per second. If the pointer position is sent about once per second (23 bits per second), AnaSyn attains a compression ratio of 230,400,000 / 23, more than 10,000,000 to 1; even if the pointer moves in every one of the 20 frames per second (460 bits per second), the ratio is still about 500,000 to 1.
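The numbers in this example can be checked with the short Python sketch below. It assumes that equation (19) is the linear ramp lightblue = height / heightmax, with rows numbered from 0 at the bottom, and it counts pointer bits as ceil(log2 800) + ceil(log2 600) = 20 bits, which is within the 23-bit bound above. The function and constant names are illustrative.

```python
import math


def lightblue(height: int, heightmax: int) -> float:
    """Assumed form of equation (19): blue intensity grows linearly from bottom (0) to top (1)."""
    return height / heightmax


WIDTH, HEIGHT, FPS, BITS_PER_PIXEL = 800, 600, 20, 24
HEIGHTMAX = HEIGHT - 1          # rows numbered 0 (bottom) .. 599 (top)

# Receiver side: synthesize one column of the static background from the equation alone.
column = [lightblue(h, HEIGHTMAX) for h in range(HEIGHT)]
assert column[0] == 0.0 and column[-1] == 1.0

raw_bps = FPS * WIDTH * HEIGHT * BITS_PER_PIXEL                                  # uncompressed video
bits_per_position = math.ceil(math.log2(WIDTH)) + math.ceil(math.log2(HEIGHT))  # x and y

print(raw_bps)                                  # 230400000
print(bits_per_position)                        # 20
print(raw_bps // 23)                            # 10017391: ratio at 23 bits per second
print(raw_bps // (bits_per_position * FPS))     # 576000: ratio if the pointer moves every frame
```

At one pointer update per second the ratio is about ten million to one; if the pointer moves in every frame it drops to 576,000 to one, which is still very large.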

"The Urantia Book", paragraph 42.11_1: "In the evaluation and recognition of mind it should be remembered that the universe is neither mechanical nor magical; it is a creation of mind and a mechanism of law. But while in practical application the laws of nature operate in what seems to be the dual realms of the physical and the spiritual, in reality they are one. The First Source and Center is the primal cause of all materialization and at the same time the first and final Father of all spirits. The Paradise Father appears personally in the extra-Havona universes only as pure energy and pure spirit - as the Thought Adjusters and other similar fragmentations."

In this section we present the hypothesis that the electro-chemical activity of the neurons of our brain is a kind of symbol of outer reality that measures its quantity of information. The adaptation and the logarithmic law of our sensory neurons suggest that we measure the information of outer reality with our senses. We show by example that the physical nature of a symbol is not essential to its function. So the electro-chemical activity of our nervous system could also be a kind of symbol of the objects of outer reality. With this generalization of the concept of symbol, we broaden the concepts of space and time and, finally, of information. On this basis, the frequency of the electro-chemical pulses of a neuron would be the frequency of occurrence of a symbol of past events in the region of its dendrites and of future events in the region of its synapses. The strength of the connections between neurons would be a function of the correlation coefficient and the conditional probability between the symbols represented by those neurons.

The adaptation and the logarithmic law of our sensory neurons suggest that we measure the information of outer reality with our senses. The quantity of information increases with changes in time and space. In our nervous system, adaptation and lateral inhibition increase the frequency of neuronal electro-chemical activity when there are changes in time and space. Adaptation is the property, shared by most of our sensory neurons, of decreasing the frequency of their electro-chemical pulses when the physical stimulus stabilizes at a certain level. When a new physical stimulus reaches our senses, the frequency of pulses in the sensory neurons increases. If the stimulus persists unchanged, the frequency decreases, adapting the nervous system to the invariant situation. Because of that, we adapt and become accustomed to smells and tactile stimuli whenever we remain in the same environment. In other words, the frequency of electro-chemical pulses in our sensory neurons increases when the physical stimulus rises, and decreases when there is no change. In the retina of the human eye there is a neural circuit, called lateral inhibition, that increases the frequency of neuronal pulses where it detects changes in light intensity across the retina. Each sensory neuron of the retina is linked to its neighbors through inhibitory connections. This circuit has the property of enhancing the borders of a visual object: it magnifies the regions where light intensity changes across the space of the retina. The eye is very sensitive to changes and movements in the visual field. All these facts support the thesis that our perception, like the quantity of information, increases with changes in time and space.
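The two mechanisms can be illustrated with deliberately simple models, which are our assumptions and not physiological measurements. Lateral inhibition is modeled as each receptor's input minus a fraction k of its neighbors' inputs, a simplified feed-forward version of classic lateral-inhibition models; adaptation is modeled as the amount by which the stimulus exceeds an internal level that slowly tracks it. Both respond strongly to change and weakly to constancy. The function names and parameter values are hypothetical.

```python
def lateral_inhibition(intensity: list[float], k: float = 0.2) -> list[float]:
    """Each receptor's output is its own input minus k times each neighbor's input.
    Receptors at the ends reuse their own value for the missing neighbor.
    Outputs are clipped at 0 because a firing rate cannot be negative."""
    n = len(intensity)
    out = []
    for i, x in enumerate(intensity):
        left = intensity[i - 1] if i > 0 else x
        right = intensity[i + 1] if i < n - 1 else x
        out.append(max(0.0, x - k * (left + right)))
    return out


def adapting_response(stimulus: list[float], alpha: float = 0.5) -> list[float]:
    """Response = how far the stimulus exceeds an internal level that slowly tracks it."""
    level = stimulus[0]
    out = []
    for s in stimulus:
        out.append(max(0.0, s - level))
        level += alpha * (s - level)   # the internal level moves toward the current stimulus
    return out


# A dark region next to a bright one: the response dips and peaks at the border.
edge = [1.0] * 5 + [5.0] * 5
print([round(v, 2) for v in lateral_inhibition(edge)])
# [0.6, 0.6, 0.6, 0.6, 0.0, 3.8, 3.0, 3.0, 3.0, 3.0]

# A stimulus that switches on and then stays constant: the response fades.
onset = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0]
print(adapting_response(onset))
# [0.0, 0.0, 1.0, 0.5, 0.25, 0.125, 0.0625]
```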

Experimental measurements also show that the frequency of the electro-chemical pulses in our sensory neurons follows, approximately, the logarithm of the intensity of the physical stimulus. In psychophysical experiments, subjects report that when the physical stimulus is multiplied by the same factor, they perceive the same additive increase. Turning a geometric change into an arithmetic one is a property of the logarithmic function, as we saw in equation 4. The logarithm is also the function that we use to measure the quantity of information. In fact, when we want to store an integer quantity as information in the memory of a computer, we use a number of bits given by the base-two logarithm of that quantity, rounded up. The presence of the logarithmic function in our sensory nervous system suggests that we are measuring information with our senses.
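Fechner's logarithmic law can be sketched in a few lines of Python, assuming the form sensation = k · log2(stimulus / threshold) with k = 1 and threshold = 1 (illustrative values, not measured ones). Doubling the stimulus always adds the same amount, and Python's `int.bit_length()` shows the related fact that storing a positive integer takes about log2 of it in bits.

```python
import math


def perceived(stimulus: float, threshold: float = 1.0, k: float = 1.0) -> float:
    """Fechner-style law (assumed form): sensation grows with the log of stimulus/threshold."""
    return k * math.log2(stimulus / threshold)


stimuli = [1.0, 2.0, 4.0, 8.0, 16.0]          # each step multiplies the stimulus by 2
sensations = [perceived(s) for s in stimuli]
steps = [b - a for a, b in zip(sensations, sensations[1:])]
print(sensations)   # [0.0, 1.0, 2.0, 3.0, 4.0]
print(steps)        # [1.0, 1.0, 1.0, 1.0]: equal additive increases

# Bits needed to store a positive integer: floor(log2(value)) + 1.
for value in (1, 255, 256, 1_000_000):
    print(value, value.bit_length(), round(math.log2(value), 2))
```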

The idea that our neural activity is a kind of symbol of exterior objects also supports the thesis that our perception measures the quantity of information of outer reality. This is so because information is a function of the probability of a symbol. The first difficulty for this thesis is that the physical nature of the brain is completely different from that of a computer and of the symbols of a language. Let us analyze the importance of the physical constituents of symbols. We can symbolize the same object with symbols of completely different physical natures. The same object can be symbolized by the drawing of a written word, by the sound of a spoken word, by the electromagnetic waves of a word broadcast by a radio station and, finally, by relays, valves, transistors, optical disks or magnetic tapes in the memory of a computer. This suggests that the physical nature of the same symbol can vary widely, perhaps because it is not important to its symbolic function. In fact, information is a dimensionless quantity. This is because the logarithmic function yields dimensionless quantities. Also, the probability of a symbol is calculated from a pure number: the frequency of that symbol in time or space. These facts motivate us to say that a pure abstract symbol is something without a physical substrate, and that information is something without physical form. Symbols and information are, in a certain sense, immaterial, like ideas.

On the other hand, we will say that our ideas, our perceptions and, in general, the electro-chemical activity of our nervous system are a kind of symbol of the objects of outer reality. This is the key hypothesis in our generalization of the concept of symbol and, consequently, of information theory. These ideas were born with computers and their analogies with the brain. In this context, a symbol has the property that its existence is related to the existence of the symbolized object. In this sense, the electro-chemical activity in the auditory nerve is a symbol of sound, because its existence is related to the existence of sound in the cochlea of our ears. Likewise, the electro-chemical activity in our optic nerve is a symbol of light, because its existence is related to the existence of light on the retina of our eyes. In general, we can say that the electro-chemical activity of all sensory and afferent neurons is a symbol of the outer physical stimulus that caused it, because the existence of this activity is caused by and related to the physical stimulus. We can also say that the electro-chemical activity of our motor and efferent neurons is a symbol of the movements of our muscles, because those movements are caused by and related to this activity. The activity of the whole nervous system is directly or indirectly related to objects of reality. This activity exists as a cause, as a consequence, sometimes simultaneously, and always in relation to objects of a different physical nature.

The quantity of information is the logarithm of the inverse of the probability of a generalized symbol in a generalized space-time. A generalized space is the set of possible symbols of a system. In a computer, the possible symbols are the states of its memory. In the brain, those symbols are the electro-chemical activity of its neurons. A generalized time is a "beat" that marks the movements and changes of the variable symbols of a system. In a computer, the machine clock marks the changes of its symbols. In the brain, time passes with the changes in the frequency of the electro-chemical activity of the symbolizing neurons mentioned above. And if we make the hypothesis that all categories are symbolized in our brain and that its activity is a kind of symbol of reality, then physical space and time would be a particular case of the generalized space-time above, with a special kind of metric and of continuity relations between the symbols of states and their movements.

Basically, the electro-chemical activity of our neurons consists of pulses of depolarization of their membrane. In the rest state, because of the active transport of sodium and potassium ions, there is a difference in ion concentration between the cytoplasm and the extracellular environment of the neuron. This causes a voltage difference of about -70 millivolts across the membrane (interior relative to exterior). When the potential difference across the membrane of the neuron's body crosses a threshold, some channels in the membrane open. This promotes an abrupt diffusion of ions and the depolarization of the membrane. This electro-chemical activity of the cellular membrane propagates from the body of the neuron through its axon, reaching its synaptic terminals. The global effect is that prolongations of the neuron's body, called dendrites, perform a kind of addition: when the summed voltage reaches a threshold, pulses of depolarization begin to propagate along the axon. The frequency of those pulses increases as a function of the intensity of the stimulus. As we have seen before, the electro-chemical activity of the neurons is a kind of symbol of something. This activity is caused by stimuli in the region of the dendrites and, as a consequence, promotes electro-chemical activity in its synaptic terminals. Because of that, we say that the frequency of the electro-chemical pulses in a neuron is the frequency of occurrence of a symbol of past events in the region of its dendrites and of future events in the region of its synapses.
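The summation-and-threshold behavior described above, and the rise of pulse frequency with stimulus intensity, can be sketched with the leaky integrate-and-fire model. This is a standard textbook simplification chosen here for illustration, not a model used in the text. Only the -70 mV resting potential comes from the paragraph; the threshold (-55 mV), reset (-75 mV), time constant (10 ms), resistance (10 MΩ, with input current in nA) and the function name `firing_rate` are assumptions.

```python
def firing_rate(input_current: float,
                duration_ms: float = 1000.0,
                dt_ms: float = 0.1,
                v_rest: float = -70.0,       # resting potential (mV), as in the text
                v_threshold: float = -55.0,  # assumed firing threshold (mV)
                v_reset: float = -75.0,      # assumed post-spike reset (mV)
                tau_ms: float = 10.0,        # assumed membrane time constant (ms)
                resistance: float = 10.0) -> float:  # assumed resistance (MOhm)
    """Spikes per second of a leaky integrate-and-fire neuron (illustrative parameters)."""
    v = v_rest
    spikes = 0
    steps = int(duration_ms / dt_ms)
    for _ in range(steps):
        # The membrane potential relaxes toward rest while summing the input (Euler step).
        dv = (-(v - v_rest) + resistance * input_current) / tau_ms
        v += dv * dt_ms
        if v >= v_threshold:
            spikes += 1   # a depolarization pulse is emitted
            v = v_reset   # the membrane is reset after the pulse
    return spikes / (duration_ms / 1000.0)

for current in (1.0, 2.0, 3.0, 4.0):
    print(current, firing_rate(current))
```

With these parameters a weak input (1 nA) never reaches threshold, while stronger inputs produce pulses whose frequency grows with the input, which is the qualitative behavior the paragraph describes.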

This electro-chemical activity is transmitted from neuron to neuron through connections called synapses. There are inhibitory and excitatory synapses. The instantaneous activity of a neuron is easier to measure than the modifications of its connections, because those modifications occur slowly. Yet Kandel [4] provided experimental support for Hebb's hypothesis, according to which the strength of a synaptic connection increases when the pre-synaptic and post-synaptic neurons are active simultaneously. It is also known that the number and size of neuronal connections in older people are greater than in children. This suggests that memory and learned experience are stored, at least in part, in the synaptic connections. Neurobiological experiments also show that if we cut a nerve in a certain region, the preceding neurons die. There are many experiments showing how the simultaneous electro-chemical activity of neurons influences their interconnections. The contribution of this article is to give a hypothetical probabilistic meaning to the connections between neurons. Because we believe that the frequency of the electro-chemical pulses of a neuron is the frequency of a symbol, we would say that the strength of the connections between neurons is a function of the correlation coefficient and of the conditional probability between the symbols represented by the electro-chemical activity of those neurons.
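To make the two quantities named above concrete, the sketch below estimates the correlation coefficient and the conditional probability P(post fires | pre fires) between two binary spike trains, where 1 means the neuron fired in a given time bin. The spike trains are synthetic and hypothetical, and the text does not specify how these quantities combine into a connection strength, so the sketch only computes them and does not assume any particular weight formula.

```python
import math
import random

def correlation(x, y):
    """Pearson correlation coefficient between two equal-length 0/1 sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    sx = math.sqrt(sum((a - mx) ** 2 for a in x) / n)
    sy = math.sqrt(sum((b - my) ** 2 for b in y) / n)
    return cov / (sx * sy)  # undefined if either neuron never (or always) fires

def conditional_probability(pre, post):
    """Estimate P(post fires | pre fires) from co-occurrence counts."""
    pre_count = sum(pre)
    both = sum(1 for a, b in zip(pre, post) if a and b)
    return both / pre_count

# Hypothetical spike trains over 5000 time bins.
random.seed(0)
pre = [1 if random.random() < 0.3 else 0 for _ in range(5000)]
# This post-synaptic neuron mostly follows the pre-synaptic one (coupled pair)...
coupled = [a if random.random() < 0.9 else 1 - a for a in pre]
# ...while this one fires independently of it.
independent = [1 if random.random() < 0.3 else 0 for _ in range(5000)]

for name, post in (("coupled", coupled), ("independent", independent)):
    print(name, round(correlation(pre, post), 3),
          round(conditional_probability(pre, post), 3))
```

For the coupled pair both quantities are high; for the independent pair the correlation is near zero and the conditional probability falls back to the post-synaptic neuron's own firing rate.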

"The Urantia Book", paragraph 42.7_10: "The first twenty-seven atoms, those containing from one to twenty-seven orbital electrons, are more easy of comprehension than the rest. From twenty-eight upward we encounter more and more of the unpredictability of the supposed presence of the Unqualified Absolute. But some of this electronic unpredictability is due to differential ultimatonic axial revolutionary velocities and to the unexplained 'huddling' proclivity of ultimatons. Other influences - physical, electrical, magnetic, and gravitational - also operate to produce variable electronic behavior. Atoms therefore are similar to persons as to predictability. Statisticians may announce laws governing a large number of either atoms or persons but not for a single individual atom or person."

In this section we present the hypothesis that the probabilistic laws of quantum physics may be a sign of the presence of life within the atoms. In the first half of the twentieth century, quantum theory introduced probabilistic laws to describe the movements of atomic particles. These experimentally confirmed probabilistic laws may be an indication that the atomic world is much more complex than we presently believe. It is possible that there is life and a pattern like that of a "solar system" within the atom, and that those probabilistic laws are a kind of statistics of this complex atomic world.

In the nineteenth century, owing to the great success of classical physics, some believed that we live in a deterministic world. For them, if we could know the initial position and velocity of all material bodies in the universe, we could determine all movements and the future state of everything. In fact, this holds to a certain extent for celestial bodies. A natural extension of this way of thinking is to believe that the same happens at the atomic scale. But many physical experiments, such as black-body radiation, the photoelectric effect, the optical spectrum of hydrogen and the wave properties of electrons, indicate a different reality inside the atom. The concepts of energy quantization and of the wave-particle duality of matter and radiation culminated in the famous wave equation of Schroedinger [5] and in the probabilistic interpretation of this equation. Particles were described by field equations (wave functions), and the squared magnitude of the field at a certain position gives the probability density of finding the particle at that position. So position and momentum, time and energy, and derived physical quantities are given probabilistic values instead of determinate ones.
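The probabilistic interpretation can be illustrated with a textbook case chosen here only as an example: a particle in a one-dimensional infinite well of width L, whose ground state is ψ(x) = sqrt(2/L) sin(πx/L). The probability of finding the particle between a and b is the integral of |ψ(x)|² over that interval. The sketch below approximates that integral with the midpoint rule; the function names and the step count are assumptions.

```python
import math

def box_wavefunction(x: float, length: float, n: int = 1) -> float:
    """Stationary state n of a particle in a 1-D infinite well of width `length`."""
    return math.sqrt(2.0 / length) * math.sin(n * math.pi * x / length)

def probability(a: float, b: float, length: float, n: int = 1, steps: int = 10_000) -> float:
    """P(a <= x <= b) = integral of |psi(x)|^2 dx, approximated by the midpoint rule."""
    h = (b - a) / steps
    total = 0.0
    for i in range(steps):
        x = a + (i + 0.5) * h
        total += box_wavefunction(x, length, n) ** 2
    return total * h

length = 1.0
print(probability(0.0, length, length))      # whole well: ~1.0 (normalization)
print(probability(0.0, length / 2, length))  # left half: ~0.5 by symmetry
print(probability(0.0, length / 4, length))  # first quarter: ~0.091
```

The wave function does not say where the particle is; it only assigns probabilities, which is the sense in which position becomes a probabilistic rather than a determinate value.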

These probabilistic laws were confirmed by more refined experiments that became possible with technological development. But why these probabilistic laws exist remains an open problem. Some may believe that atomic particles are like the dice of a game of chance. Others may believe that the atomic world is as complex as human society and that the probabilistic laws are a kind of statistics of this integrated complexity. In fact, a great number of subatomic particles have been detected in particle accelerators. Also, in theoretical physics, superstring theories, which propose a unified explanation of the force fields and the particles of the universe, describe a ten-dimensional physics composed of six new compact spatial dimensions, three extended spatial dimensions, and time. So, experimentally and theoretically, the complexity of the universe has increased. This complexity may explain the probabilistic observations and laws of quantum physics.
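The contrast between an unpredictable individual and a predictable aggregate, echoed in the quotation that opens this section, can be illustrated with the dice mentioned above. The sketch simulates a fair six-sided die (an illustrative assumption, not a model of any atomic process): single rolls cannot be predicted, but the average of many rolls approaches the expected value 3.5 (the law of large numbers).

```python
import random

def mean_of_rolls(n: int, rng: random.Random) -> float:
    """Average value of n rolls of a fair six-sided die."""
    return sum(rng.randint(1, 6) for _ in range(n)) / n

rng = random.Random(42)
print([rng.randint(1, 6) for _ in range(5)])  # individual rolls: unpredictable
for n in (10, 1_000, 100_000):
    print(n, mean_of_rolls(n, rng))  # the average approaches 3.5 as n grows
```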

But, as we have seen throughout this article, organization, probability and complexity are marks of life. Because the states and movements of living organisms are complex and probabilistic, we have called them vital and shown in which sense they are full of information. Simple, determinate systems, which are described by exact mathematical equations, hold less information; the states and movements of those systems were called determinate signals. Because probability and statistical laws best describe the states and movements of life, we suggest here that the probabilistic laws of atomic-scale physics may be explained by the presence of life within the atom. To prove this hypothesis, it will be necessary to understand the macroscopic behavior of life. The study of the whole universe will be important for understanding each individual part of it, if in some form the seed of the whole is present in the center of each indivisible part, each a-tomic¹ part of this universal whole. This study may stimulate a more multidisciplinary and holistic approach among the students of the school of life.
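One way to quantify the claim that determinate signals hold less information than probabilistic ones is to measure how much information each new symbol carries given the previous one, the conditional entropy H(X_t | X_{t-1}). The sketch below is an assumption of this illustration (a first-order estimate, not a measure defined in the text): it compares a perfectly periodic binary signal with a sequence of fair coin tosses.

```python
import math
import random
from collections import Counter

def conditional_entropy(seq) -> float:
    """H(X_t | X_{t-1}) in bits, estimated from consecutive symbol pairs."""
    pairs = Counter(zip(seq, seq[1:]))
    prev = Counter(seq[:-1])
    total = sum(pairs.values())
    h = 0.0
    for (a, _b), count in pairs.items():
        p_pair = count / total     # P(X_{t-1} = a, X_t = b)
        p_cond = count / prev[a]   # P(X_t = b | X_{t-1} = a)
        h -= p_pair * math.log2(p_cond)
    return h

n = 10_000
deterministic = [i % 2 for i in range(n)]              # 0,1,0,1,... fully predictable
rng = random.Random(7)
probabilistic = [rng.randint(0, 1) for _ in range(n)]  # fair coin tosses

print(conditional_entropy(deterministic))  # 0.0
print(conditional_entropy(probabilistic))  # close to 1.0
```

Once the past of the periodic signal is known, each new symbol is certain and carries no information, while each coin toss still carries about one bit.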